When Schools Don’t Have an AI Plan: How We Can Start Doing Something About It

- Oct 20, 2025

A little while back, I posed a straightforward question to my friend, who happens to be a school principal: “Does your school have an AI working group, or is there any team exploring the best ways to incorporate AI into learning?”

The answer was short and surprising: No.

Not only does the school lack such a group, but the entire district, one ranked among the best in the state, has no formal initiative addressing AI in education. This district has excellent teachers, engaged parents, and solid resources. Yet when it comes to artificial intelligence, there is no strategy, no coordination, and no shared vision.

It’s the educational Wild West, left to individual teachers to navigate on their own—adding one more challenge to their already full plates.

And that should concern us all.

Children Are Already Using AI — Often Better Than Adults Think

Whether schools are ready or not, AI has already entered the classroom. Middle school students are using ChatGPT to write essays, generate project ideas, or summarize long texts. Some even use it to explain concepts they didn’t fully grasp in class.

Teachers, understandably worried about plagiarism, are trying to detect or block it. But in doing so, many schools are missing the deeper point: AI is not going away.

Students will continue to use it—at home, at school, and later in the workplace. If we don’t guide them, they’ll learn from TikTok tutorials and online forums instead. And that means they’ll learn how to hide AI use, not how to use AI responsibly.

The result? We risk raising a generation of students who are brilliant at bypassing detectors—but poor at learning, questioning, and thinking critically.

The Real Issue: A Leadership and Policy Gap

This isn’t simply a technology gap. It’s a leadership gap.

AI in education is not just about adding tools; it’s about rethinking what and how we teach. Yet most schools have not built structures for that conversation.

A few teachers experiment with tools like ChatGPT, Canva Magic Write, or Khanmigo. Some administrators attend conferences or webinars. But without a coordinated framework, each classroom becomes an isolated experiment.

What’s missing is institutional leadership—a shared policy that sets direction, provides training, and ensures ethical, inclusive implementation.

And it’s not just schools. At the ministry level, the uncertainty is even more visible. In many countries, the default solution has been the simplest one: just ban it. Banning AI tools in classrooms may look like decisive action, but in reality, it’s a way to postpone hard decisions.

A ban doesn’t solve the underlying challenge; it only postpones it. Students will still use AI outside school hours, teachers will still explore it privately, and education systems will still fall behind. Real leadership means creating conditions for safe, ethical exploration, not enforcing silence.

The absence of policy isn’t neutrality — it’s a policy of avoidance.

Why Every School Needs an AI Working Group

The first step is simple but powerful: create an AI working group.

This doesn’t need to be a bureaucratic committee or a team of technical experts. In fact, it works best when it includes:

- Teachers who understand classroom realities

- Students who represent how AI is actually being used

- Parents who bring community perspectives

- Administrators who can turn ideas into policy

Such a group can meet monthly to explore three key questions:

- How is AI currently being used, both by teachers and by students?

- What opportunities does AI create for deeper, more personalized learning?

- What ethical and pedagogical risks must we anticipate and manage?

This small but focused collaboration can help the school move from reactive to proactive—transforming scattered initiatives into coherent practice.

Building an AI Policy Framework: Four Pillars

In my experience working with schools and educators, an effective AI strategy in education rests on four core pillars:

1. Pedagogical Integration

AI should not replace the teacher—it should extend their reach. Schools need to identify where AI genuinely supports learning goals:

- Supporting differentiated instruction

- Generating feedback and formative assessment

- Enhancing creative writing, design, and inquiry-based learning

- Helping students practice languages or coding through dialogue

Every teacher should have access to practical examples and training—not to make them “AI experts,” but to help them teach better with AI.

2. Ethical and Responsible Use

AI literacy is as essential as digital literacy once was. Students should learn:

- How AI systems generate content

- The limits and biases of these systems

- When and how to cite AI assistance

- Why transparency and responsibility matter

This isn’t just a tech lesson—it’s a citizenship lesson.

3. Teacher Support and Capacity Building

Expecting teachers to figure this out alone is unfair. Schools should allocate time and resources for AI professional learning. That includes:

- Regular workshops or “AI cafés” to share classroom practices

- Access to vetted, educationally sound tools

- Clear guidelines on privacy and data protection

When teachers feel supported, they become innovators rather than gatekeepers.

4. Community Engagement

Parents and the wider community must be part of the conversation. They need reassurance that AI in learning is not about replacing human relationships but about strengthening them through smarter tools.

Transparent communication builds trust—and trust builds innovation.

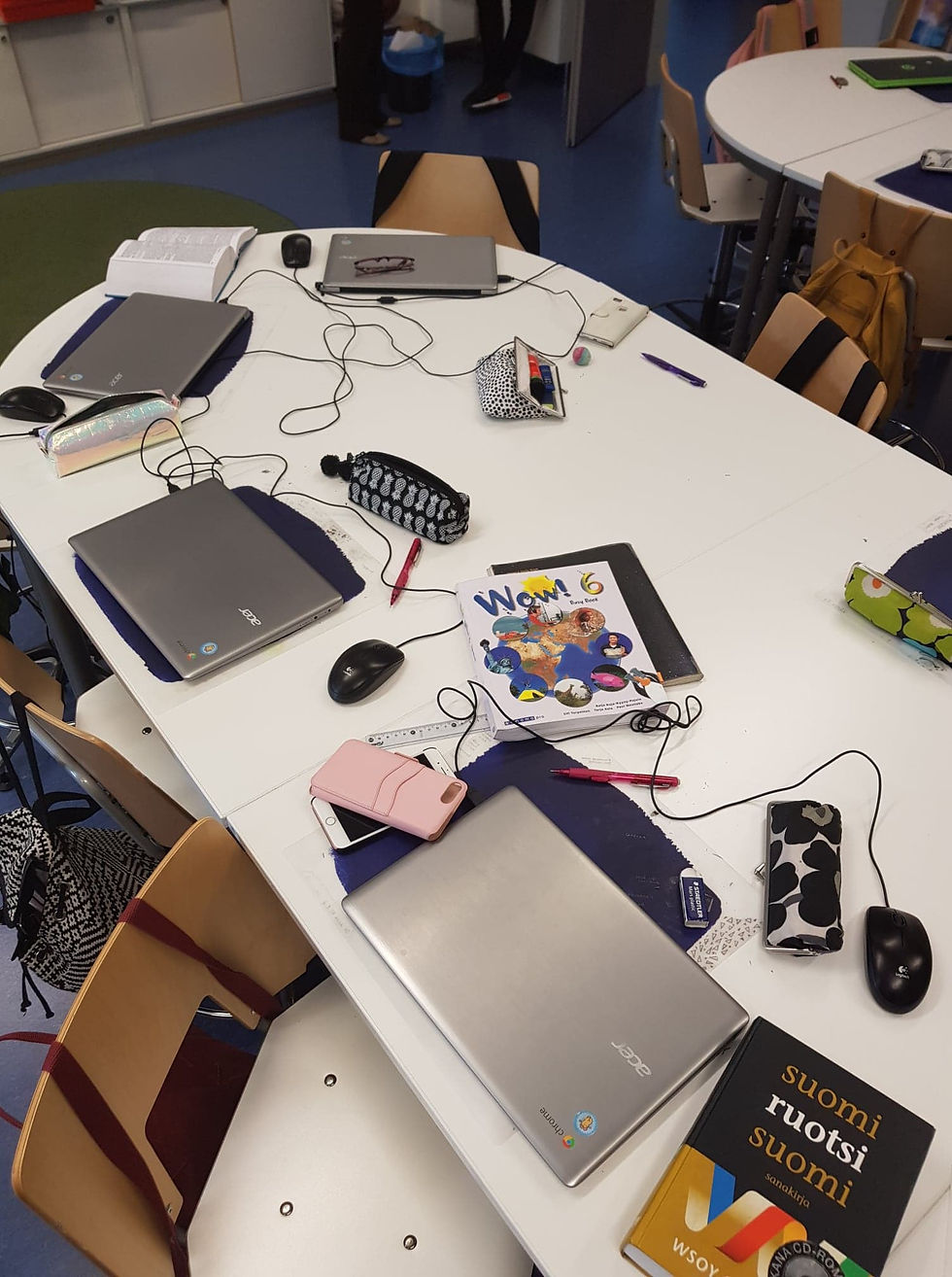

Learning from Finland and Other Human-Centered Models

In Estonia, Finland, and parts of Scandinavia, the integration of digital tools has always been guided by pedagogy first, technology second. The same principle applies to AI.

Rather than rushing to adopt every new tool, educators in these systems start by asking:

- What learning process do we want to improve?

- How can technology support reflection, curiosity, and collaboration?

This human-centered mindset—rooted in trust, autonomy, and professional learning—is what other education systems urgently need to rediscover in the age of AI.

A Call to Action: Start the Conversation

No school can afford to wait for a perfect policy or a national framework. The pace of AI development is simply too fast.

But every school can start small:

- Form a cross-functional AI working group.

- Map current practices and risks.

- Identify one or two pilot projects where AI could genuinely enhance learning.

- Share results openly, so others can learn and build upon them.

Even modest steps can create powerful momentum. What matters most is not technical sophistication, but the willingness to engage.

The Future Belongs to the Prepared

AI is not a threat to education—unless we ignore it.

Handled wisely, it can help teachers personalize learning, empower students to think critically, and prepare a new generation to live and work responsibly in an AI-driven world.

Handled poorly—or not at all—it will widen gaps, confuse ethics, and reduce learning to a race between humans and machines.

The choice is ours.

And it starts with a single, simple question:

“Who in our school is thinking about AI—and how can we make sure everyone has a voice in that conversation?”
